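This is the example from the official PyTorch site (the DCGAN tutorial). It records the process of training a GAN to generate toothbrush images; by the end, the toothbrush images produced by the generator are already fairly good.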

```python
from __future__ import print_function
#%matplotlib inline
import argparse
import os
import random
import torch
import torch.nn as nn
import torch.nn.parallel
import torch.backends.cudnn as cudnn
import torch.optim as optim
import torch.utils.data
import torchvision.datasets as dset
import torchvision.transforms as transforms
import torchvision.utils as vutils
import numpy as np
import matplotlib.pyplot as plt
import matplotlib.animation as animation
from IPython.display import HTML

# Set random seed for reproducibility
manualSeed = 999
#manualSeed = random.randint(1, 10000) # use if you want new results
print("Random Seed: ", manualSeed)
random.seed(manualSeed)
torch.manual_seed(manualSeed)
```
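The seed lines above cover Python's `random` module and PyTorch's default generator (which `torch.manual_seed` also applies to all CUDA devices). A minimal sketch of a somewhat broader seeding helper follows; the name `seed_everything` is hypothetical, and it assumes you also want NumPy seeded and cuDNN restricted to deterministic kernels, which can cost some speed.

```python
import random

import numpy as np
import torch


def seed_everything(seed: int) -> None:
    """Hypothetical helper: seed every RNG this notebook touches."""
    random.seed(seed)        # Python's built-in RNG
    np.random.seed(seed)     # NumPy's global RNG
    torch.manual_seed(seed)  # PyTorch's default generator (CPU and all CUDA devices)
    # Ask cuDNN for deterministic kernels and turn off auto-tuning,
    # which may otherwise choose different algorithms from run to run.
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False


seed_everything(999)
a = torch.randn(3)
seed_everything(999)
b = torch.randn(3)
print(torch.equal(a, b))  # same seed, same samples
```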

Define some parameters

```python
# Root directory for dataset
dataroot = "data/celeba"

# Number of workers for dataloader
workers = 2

# Batch size during training
batch_size = 8

# Spatial size of training images. All images will be resized to this
#   size using a transformer.
image_size = 64

# Number of channels in the training images. For color images this is 3
nc = 3

# Size of z latent vector (i.e. size of generator input)
nz = 100

# Size of feature maps in generator
ngf = 64

# Size of feature maps in discriminator
ndf = 64

# Number of training epochs
num_epochs = 30

# Learning rate for optimizers
lr = 0.005

# Beta1 hyperparam for Adam optimizers
beta1 = 0.5

# Number of GPUs available. Use 0 for CPU mode.
ngpu = 1
```

Data loading class

```python
import os

import cv2 as cv  # OpenCV, used for image loading and resizing
import torch
import torchvision.transforms as transforms
from torch.utils.data import Dataset


class ChipDatasets(Dataset):
    def __init__(self, root, image_transforms=None, size=(128, 128)):
        """Dataset of all images found directly under `root`.

        Args:
            root (str): directory containing the images.
            image_transforms (callable, optional): transform applied to the input
                image (a uint8 CHW tensor). Defaults to None.
            size (tuple, optional): size=(width, height). Defaults to (128, 128).
        """
        self.root = root  # the images sit directly under root
        self.image_paths = self.get_all_images(root)
        self.image_transforms = image_transforms
        # uint8 CHW tensor -> PIL image -> float CHW tensor in [0, 1]
        self.label_transforms = transforms.Compose([transforms.ToPILImage(), transforms.ToTensor()])
        self.h = size[1]  # size=(width, height)
        self.w = size[0]  # size=(width, height)

    def get_all_images(self, root):
        image_externs = ["bmp", "png", "jpg", "jpeg"]
        image_paths = []
        for item in os.listdir(root):
            item_extern = item.rsplit(".", 1)[-1]
            if str(item_extern).lower() in image_externs:
                image_paths.append(os.path.join(root, item))
        return image_paths

    def __len__(self):
        return len(self.image_paths)

    def __getitem__(self, idx):
        image = cv.imread(self.image_paths[idx])
        image = cv.cvtColor(image, cv.COLOR_BGR2RGB)  # use RGB so TensorBoard displays colors correctly
        # Resize here: DataLoader needs every image in a batch to have the same size
        h, w = image.shape[:2]
        if h != self.h or w != self.w:
            image = cv.resize(image, (self.w, self.h))

        # prepare the input
        # defect_image = self.create_defect_image(image)  # add damage to the input
        defect_image = image
        defect_image = torch.tensor(defect_image)
        defect_image_chw = defect_image.permute(2, 0, 1)  # HWC -> CHW
        if self.image_transforms is None:
            input = self.label_transforms(defect_image_chw)
        else:
            input = self.image_transforms(defect_image_chw)

        # prepare the label
        label_tensor = torch.tensor(image)
        label_tensor = label_tensor.permute(2, 0, 1)
        label = self.label_transforms(label_tensor)
        return input, label
```

Create the dataset and dataloader

```python
# The author originally re-imported these classes from a local module (my_encoder)
# that is not part of this post; the ChipDatasets class defined above is used instead.
# import importlib
# import my_encoder
# importlib.reload(my_encoder)
# from my_encoder import AutoEncoder, DecoderStraight, Encoder, ChipDatasets, SSIM

# We can use an image folder dataset the way we have it setup.
# Create the dataset
# dataset = dset.ImageFolder(root=dataroot,
#                            transform=transforms.Compose([
#                                transforms.Resize(image_size),
#                                transforms.CenterCrop(image_size),
#                                transforms.ToTensor(),
#                                transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
#                            ]))
dataset = ChipDatasets(r"H:\imageData\MVTec\hazelnut\good", None, size=(64, 64))

# Create the dataloader
dataloader = torch.utils.data.DataLoader(dataset, batch_size=batch_size,
                                         shuffle=True, num_workers=workers)

# Decide which device we want to run on
device = torch.device("cuda:0" if (torch.cuda.is_available() and ngpu > 0) else "cpu")

# Plot some training images
real_batch = next(iter(dataloader))
plt.figure(figsize=(8, 8))
plt.axis("off")
plt.title("Training Images")
plt.imshow(np.transpose(vutils.make_grid(real_batch[0].to(device)[:64], padding=2, normalize=True).cpu(), (1, 2, 0)))
```

Weight initialization function for the models

```python
# custom weights initialization called on netG and netD
def weights_init(m):
    classname = m.__class__.__name__
    if classname.find('Conv') != -1:
        nn.init.normal_(m.weight.data, 0.0, 0.02)
    elif classname.find('BatchNorm') != -1:
        nn.init.normal_(m.weight.data, 1.0, 0.02)
        nn.init.constant_(m.bias.data, 0)
```
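Because `classname.find('Conv') != -1` matches both `Conv2d` (discriminator) and `ConvTranspose2d` (generator), one function covers both networks. A quick sketch to check the resulting statistics on a small throwaway model (the model here is hypothetical, for illustration only):

```python
import torch
import torch.nn as nn


def weights_init(m):
    # Same logic as above: N(0, 0.02) for conv weights; N(1, 0.02) and bias 0 for BatchNorm
    classname = m.__class__.__name__
    if classname.find('Conv') != -1:
        nn.init.normal_(m.weight.data, 0.0, 0.02)
    elif classname.find('BatchNorm') != -1:
        nn.init.normal_(m.weight.data, 1.0, 0.02)
        nn.init.constant_(m.bias.data, 0)


torch.manual_seed(0)
model = nn.Sequential(
    nn.ConvTranspose2d(100, 512, 4, 1, 0, bias=False),
    nn.BatchNorm2d(512),
    nn.Conv2d(512, 64, 4, 2, 1, bias=False),
)
model.apply(weights_init)  # apply() visits every submodule recursively

conv_t_w, bn, conv_w = model[0].weight, model[1], model[2].weight
print(f"ConvTranspose2d weight std: {conv_t_w.std().item():.4f}")  # should be close to 0.02
print(f"Conv2d weight std:          {conv_w.std().item():.4f}")    # should be close to 0.02
print(f"BatchNorm weight mean:      {bn.weight.mean().item():.4f}")  # should be close to 1.0
```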

Define the generator

```python
# Generator Code
class Generator(nn.Module):
    def __init__(self, ngpu):
        super(Generator, self).__init__()
        self.ngpu = ngpu
        self.main = nn.Sequential(
            # input is Z, going into a convolution
            nn.ConvTranspose2d(nz, ngf * 8, 4, 1, 0, bias=False),
            nn.BatchNorm2d(ngf * 8),
            nn.ReLU(True),
            # state size. (ngf*8) x 4 x 4
            nn.ConvTranspose2d(ngf * 8, ngf * 4, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ngf * 4),
            nn.ReLU(True),
            # state size. (ngf*4) x 8 x 8
            nn.ConvTranspose2d(ngf * 4, ngf * 2, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ngf * 2),
            nn.ReLU(True),
            # state size. (ngf*2) x 16 x 16
            nn.ConvTranspose2d(ngf * 2, ngf, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ngf),
            nn.ReLU(True),
            # state size. (ngf) x 32 x 32
            nn.ConvTranspose2d(ngf, nc, 4, 2, 1, bias=False),
            nn.Tanh()
            # state size. (nc) x 64 x 64
        )

    def forward(self, input):
        return self.main(input)
```
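The `state size` comments follow from the output-size formula for `nn.ConvTranspose2d` (with dilation 1 and no output padding): out = (in - 1) x stride - 2 x padding + kernel_size. A short sketch that recomputes them from the layer settings above:

```python
def conv_transpose_out(size, kernel, stride, padding):
    # nn.ConvTranspose2d output size, assuming dilation=1 and output_padding=0
    return (size - 1) * stride - 2 * padding + kernel


# (kernel, stride, padding) for the five ConvTranspose2d layers of the generator
layers = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]
size = 1  # the latent vector z enters as nz x 1 x 1
sizes = []
for k, s, p in layers:
    size = conv_transpose_out(size, k, s, p)
    sizes.append(size)
print(sizes)  # [4, 8, 16, 32, 64]
```

Each stride-2 layer with kernel 4 and padding 1 exactly doubles the spatial size, which is why the generator outputs 64 x 64 images and why the dataset is loaded with `size=(64, 64)`.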

Check that the generator is built correctly

```python
# Create the generator
netG = Generator(ngpu).to(device)

# Handle multi-gpu if desired
if (device.type == 'cuda') and (ngpu > 1):
    netG = nn.DataParallel(netG, list(range(ngpu)))

# Apply the weights_init function to randomly initialize all weights
#  to mean=0, stdev=0.02.
netG.apply(weights_init)

# Print the model
print(netG)
```

```text
Generator(
  (main): Sequential(
    (0): ConvTranspose2d(100, 512, kernel_size=(4, 4), stride=(1, 1), bias=False)
    (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU(inplace=True)
    (3): ConvTranspose2d(512, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (5): ReLU(inplace=True)
    (6): ConvTranspose2d(256, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (7): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (8): ReLU(inplace=True)
    (9): ConvTranspose2d(128, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (10): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (11): ReLU(inplace=True)
    (12): ConvTranspose2d(64, 3, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (13): Tanh()
  )
)
```

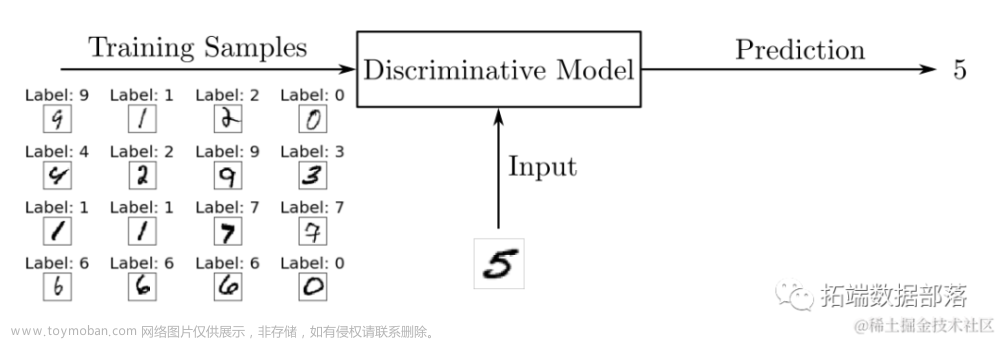

Define the discriminator

```python
class Discriminator(nn.Module):
    def __init__(self, ngpu):
        super(Discriminator, self).__init__()
        self.ngpu = ngpu
        self.main = nn.Sequential(
            # input is (nc) x 64 x 64
            nn.Conv2d(nc, ndf, 4, 2, 1, bias=False),
            nn.LeakyReLU(0.2, inplace=True),
            # state size. (ndf) x 32 x 32
            nn.Conv2d(ndf, ndf * 2, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 2),
            nn.LeakyReLU(0.2, inplace=True),
            # state size. (ndf*2) x 16 x 16
            nn.Conv2d(ndf * 2, ndf * 4, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 4),
            nn.LeakyReLU(0.2, inplace=True),
            # state size. (ndf*4) x 8 x 8
            nn.Conv2d(ndf * 4, ndf * 8, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 8),
            nn.LeakyReLU(0.2, inplace=True),
            # state size. (ndf*8) x 4 x 4
            nn.Conv2d(ndf * 8, 1, 4, 1, 0, bias=False),
            nn.Sigmoid()
        )

    def forward(self, input):
        return self.main(input)
```
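The discriminator mirrors this with `nn.Conv2d`, whose output size (dilation 1) is floor((in + 2 x padding - kernel_size) / stride) + 1. The same kind of sketch confirms that a 64 x 64 input is reduced to a single 1 x 1 score per image:

```python
def conv_out(size, kernel, stride, padding):
    # nn.Conv2d output size, assuming dilation=1
    return (size + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) for the five Conv2d layers of the discriminator
layers = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 0)]
size = 64  # input images are nc x 64 x 64
sizes = []
for k, s, p in layers:
    size = conv_out(size, k, s, p)
    sizes.append(size)
print(sizes)  # [32, 16, 8, 4, 1]
```

That final 1 x 1 map, after `Sigmoid`, is why the training loop can call `.view(-1)` to get one probability per image.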

Check that the discriminator is built correctly

```python
# Create the Discriminator
netD = Discriminator(ngpu).to(device)

# Handle multi-gpu if desired
if (device.type == 'cuda') and (ngpu > 1):
    netD = nn.DataParallel(netD, list(range(ngpu)))

# Apply the weights_init function to randomly initialize all weights
#  to mean=0, stdev=0.02.
netD.apply(weights_init)

# Print the model
print(netD)
```

```text
Discriminator(
  (main): Sequential(
    (0): Conv2d(3, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (1): LeakyReLU(negative_slope=0.2, inplace=True)
    (2): Conv2d(64, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (3): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (4): LeakyReLU(negative_slope=0.2, inplace=True)
    (5): Conv2d(128, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (6): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (7): LeakyReLU(negative_slope=0.2, inplace=True)
    (8): Conv2d(256, 512, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (9): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (10): LeakyReLU(negative_slope=0.2, inplace=True)
    (11): Conv2d(512, 1, kernel_size=(4, 4), stride=(1, 1), bias=False)
    (12): Sigmoid()
  )
)
```

Define the loss function

```python
# Initialize BCELoss function
criterion = nn.BCELoss()

# Create batch of latent vectors that we will use to visualize
#  the progression of the generator
fixed_noise = torch.randn(64, nz, 1, 1, device=device)

# Establish convention for real and fake labels during training
real_label = 1.
fake_label = 0.
```
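With `real_label = 1` and `fake_label = 0`, `nn.BCELoss` reduces to the terms named in the training-loop comments. For a prediction x and target y it computes -[y log x + (1 - y) log(1 - x)], averaged over the batch, so real images scored against label 1 give -log D(x) and fake images scored against label 0 give -log(1 - D(G(z))). The generator step reuses target 1 on fake images, i.e. it minimizes -log D(G(z)), which is the "maximize log(D(G(z)))" in the comments. A small numeric check with hypothetical discriminator outputs:

```python
import math

import torch
import torch.nn as nn

criterion = nn.BCELoss()
d_out = torch.tensor([0.9, 0.2])  # hypothetical discriminator outputs

# Target 1 ("real"): the loss is the mean of -log(x)
loss_real = criterion(d_out, torch.ones(2))
expected_real = -(math.log(0.9) + math.log(0.2)) / 2

# Target 0 ("fake"): the loss is the mean of -log(1 - x)
loss_fake = criterion(d_out, torch.zeros(2))
expected_fake = -(math.log(0.1) + math.log(0.8)) / 2

print(loss_real.item(), expected_real)
print(loss_fake.item(), expected_fake)
```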

Training

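Training a GAN is something of a black art. When it fails to converge, adjust the learning rate and try several more runs, or adjust the learning rate dynamically during training. For reference, the official PyTorch tutorial uses lr = 0.0002, whereas this post uses 0.005.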
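One way to adjust the learning rate dynamically is a scheduler from `torch.optim.lr_scheduler`. A minimal sketch, assuming a step decay (`StepLR`) suits your run; the `step_size` and `gamma` values are illustrative rather than tuned, and a single `nn.Linear` stands in for `netD` or `netG`:

```python
import torch.nn as nn
import torch.optim as optim

net = nn.Linear(4, 1)  # stand-in for netD or netG
optimizer = optim.Adam(net.parameters(), lr=0.005, betas=(0.5, 0.999))
# Multiply the learning rate by gamma once every step_size epochs
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.5)

lrs = []
for epoch in range(30):
    # ... one epoch of training (loss.backward(); optimizer.step() per batch) ...
    optimizer.step()  # placeholder so the scheduler follows an optimizer step
    scheduler.step()  # call once per epoch, after the optimizer has stepped
    lrs.append(optimizer.param_groups[0]["lr"])

print(lrs[8], lrs[9], lrs[19])
```

In the loop below, this would mean one scheduler per optimizer (`optimizerD`, `optimizerG`), each stepped at the end of every epoch.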

```python
# Training Loop

# Lists to keep track of progress
img_list = []
G_losses = []
D_losses = []
iters = 0

# Number of training epochs
num_epochs = 150
# Learning rate for optimizers
lr = 0.005
# Beta1 hyperparam for Adam optimizers
beta1 = 0.5

# Setup Adam optimizers for both G and D
optimizerD = optim.Adam(netD.parameters(), lr=lr, betas=(beta1, 0.999))
optimizerG = optim.Adam(netG.parameters(), lr=lr, betas=(beta1, 0.999))

print("Starting Training Loop...")
# For each epoch
for epoch in range(num_epochs):
    # For each batch in the dataloader
    for i, data in enumerate(dataloader, 0):

        ############################
        # (1) Update D network: maximize log(D(x)) + log(1 - D(G(z)))
        ###########################
        ## Train with all-real batch
        netD.zero_grad()
        # Format batch
        real_cpu = data[0].to(device)
        b_size = real_cpu.size(0)
        label = torch.full((b_size,), real_label, dtype=torch.float, device=device)
        # Forward pass real batch through D
        output = netD(real_cpu).view(-1)
        # Calculate loss on all-real batch
        errD_real = criterion(output, label)
        # Calculate gradients for D in backward pass
        errD_real.backward()
        D_x = output.mean().item()

        ## Train with all-fake batch
        # Generate batch of latent vectors
        noise = torch.randn(b_size, nz, 1, 1, device=device)
        # Generate fake image batch with G
        fake = netG(noise)
        label.fill_(fake_label)
        # Classify all fake batch with D
        output = netD(fake.detach()).view(-1)
        # Calculate D's loss on the all-fake batch
        errD_fake = criterion(output, label)
        # Calculate the gradients for this batch, accumulated (summed) with previous gradients
        errD_fake.backward()
        D_G_z1 = output.mean().item()
        # Compute error of D as sum over the fake and the real batches
        errD = errD_real + errD_fake
        # Update D
        optimizerD.step()

        ############################
        # (2) Update G network: maximize log(D(G(z)))
        ###########################
        netG.zero_grad()
        label.fill_(real_label)  # fake labels are real for generator cost
        # Since we just updated D, perform another forward pass of all-fake batch through D
        output = netD(fake).view(-1)
        # Calculate G's loss based on this output
        errG = criterion(output, label)
        # Calculate gradients for G
        errG.backward()
        D_G_z2 = output.mean().item()
        # Update G
        optimizerG.step()

        # Output training stats
        if i % 50 == 0:
            print('[%d/%d][%d/%d]\tLoss_D: %.4f\tLoss_G: %.4f\tD(x): %.4f\tD(G(z)): %.4f / %.4f'
                  % (epoch, num_epochs, i, len(dataloader),
                     errD.item(), errG.item(), D_x, D_G_z1, D_G_z2))

        # Save Losses for plotting later
        G_losses.append(errG.item())
        D_losses.append(errD.item())

        # Check how the generator is doing by saving G's output on fixed_noise
        if (iters % 100 == 0) or ((epoch == num_epochs-1) and (i == len(dataloader)-1)):
            with torch.no_grad():
                fake = netG(fixed_noise).detach().cpu()
            img_list.append(vutils.make_grid(fake, padding=2, normalize=True))

        iters += 1
```

```text
Starting Training Loop...
[0/50][0/49]	Loss_D: 1.0907	Loss_G: 29.8247	D(x): 0.4985	D(G(z)): 0.2701 / 0.0000
[1/50][0/49]	Loss_D: 100.0000	Loss_G: 0.0000	D(x): 1.0000	D(G(z)): 1.0000 / 1.0000
[2/50][0/49]	Loss_D: 100.0000	Loss_G: 0.0000	D(x): 1.0000	D(G(z)): 1.0000 / 1.0000
[3/50][0/49]	Loss_D: 100.0000	Loss_G: 0.0000	D(x): 1.0000	D(G(z)): 1.0000 / 1.0000
[4/50][0/49]	Loss_D: 100.0000	Loss_G: 0.0000	D(x): 1.0000	D(G(z)): 1.0000 / 1.0000
[5/50][0/49]	Loss_D: 100.0000	Loss_G: 0.0000	D(x): 1.0000	D(G(z)): 1.0000 / 1.0000
[6/50][0/49]	Loss_D: 100.0000	Loss_G: 0.0000	D(x): 1.0000	D(G(z)): 1.0000 / 1.0000
[7/50][0/49]	Loss_D: 100.0000	Loss_G: 0.0000	D(x): 1.0000	D(G(z)): 1.0000 / 1.0000
[8/50][0/49]	Loss_D: 100.0000	Loss_G: 0.0000	D(x): 1.0000	D(G(z)): 1.0000 / 1.0000
[9/50][0/49]	Loss_D: 100.0000	Loss_G: 0.0000	D(x): 1.0000	D(G(z)): 1.0000 / 1.0000
[10/50][0/49]	Loss_D: 100.0000	Loss_G: 0.0000	D(x): 1.0000	D(G(z)): 1.0000 / 1.0000
[11/50][0/49]	Loss_D: 100.0000	Loss_G: 0.0000	D(x): 1.0000	D(G(z)): 1.0000 / 1.0000
```
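In the log above, training collapses after the first epoch: D(x) and D(G(z)) are both 1.0000, Loss_G is 0 and Loss_D is exactly 100, which is the non-convergence case the training note refers to. The value 100 comes from `nn.BCELoss` clamping its log terms to be no smaller than -100: a discriminator that outputs 1 for a fake image (target 0) gets -log(1 - 1), clamped to 100, while the real-image term is 0. A quick reproduction:

```python
import torch
import torch.nn as nn

criterion = nn.BCELoss()
saturated = torch.ones(8)  # D outputs exactly 1.0 for every image

errD_real = criterion(saturated, torch.ones(8))   # real images, target 1: -log(1) = 0
errD_fake = criterion(saturated, torch.zeros(8))  # fake images, target 0: -log(0), clamped to 100
errD = errD_real + errD_fake
print(errD_real.item(), errD_fake.item(), errD.item())
```

In the same state the generator's loss is 0, because its target is 1 and D already outputs 1, so neither network receives a useful gradient.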

Feed in random noise to see what the GAN generator produces

```python
noise = torch.randn(batch_size, nz, 1, 1, device=device)
with torch.no_grad():
    fake = netG(noise)
# Show the first generated image of the batch
plt.imshow(np.transpose(vutils.make_grid(fake[0], padding=5, normalize=True).cpu(), (1, 2, 0)))
```

View an image from the real samples

```python
real_batch = next(iter(dataloader))
# Show the third real image of the batch
plt.imshow(np.transpose(vutils.make_grid(real_batch[0][2], padding=5, normalize=True).cpu(), (1, 2, 0)))
```

View the generator and discriminator losses during training

```python
plt.figure(figsize=(10, 5))
plt.title("Generator and Discriminator Loss During Training")
plt.plot(G_losses, label="G")
plt.plot(D_losses, label="D")
plt.xlabel("iterations")
plt.ylabel("Loss")
plt.legend()
plt.show()
```

Generator output at different stages of training

```python
fig = plt.figure(figsize=(8, 8))
plt.axis("off")
ims = [[plt.imshow(np.transpose(i, (1, 2, 0)), animated=True)] for i in img_list]
ani = animation.ArtistAnimation(fig, ims, interval=1000, repeat_delay=1000, blit=True)
HTML(ani.to_jshtml())
```

Compare real and fake images

```python
# Grab a batch of real images from the dataloader
real_batch = next(iter(dataloader))

# Plot the real images
plt.figure(figsize=(15, 15))
plt.subplot(1, 2, 1)
plt.axis("off")
plt.title("Real Images")
plt.imshow(np.transpose(vutils.make_grid(real_batch[0].to(device)[:64], padding=5, normalize=True).cpu(), (1, 2, 0)))

# Plot the fake images from the last epoch
plt.subplot(1, 2, 2)
plt.axis("off")
plt.title("Fake Images")
plt.imshow(np.transpose(img_list[-1], (1, 2, 0)))
plt.show()
```

Source: https://www.toymoban.com/news/detail-406021.html


Conclusion

Judging from the results above, even this very small GAN does a fairly good job in a scenario like generating toothbrush images.
