大家好,我是小F~

YOLO(You Only Look Once)是由Joseph Redmon和Ali开发的一种对象检测和图像分割模型。

YOLO的第一个版本于2015年发布,由于其高速度和准确性,瞬间得到了广大AI爱好者的喜爱。

Ultralytics YOLOv8则是一款前沿、最先进(SOTA)的模型,基于先前YOLO版本的成功,引入了新功能和改进,进一步提升性能和灵活性。

YOLOv8设计快速、准确且易于使用,使其成为各种物体检测与跟踪、实例分割、图像分类和姿态估计任务的绝佳选择。

项目地址:

https://github.com/ultralytics/ultralytics

其中官方提供了示例,通过Python代码即可实现YOLOv8对象检测算法模型,使用预训练模型来检测我们的目标。

而且对电脑需求也不高,CPU就能运行代码。

今天小F就给大家介绍三个使用YOLOv8制作的检测器,非常实用。

/ 01 /

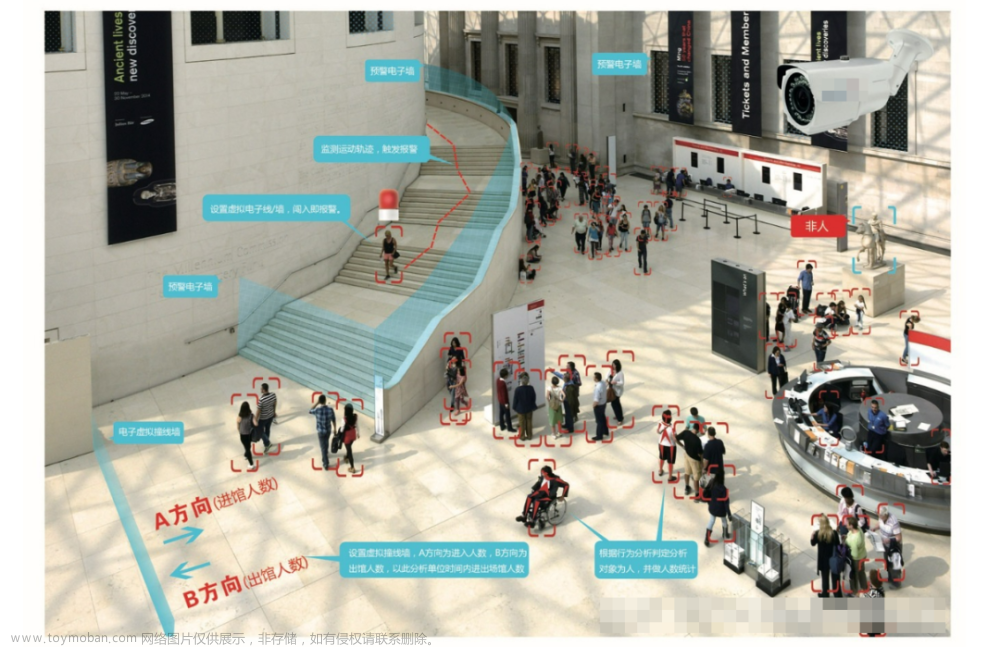

行人检测器

使用YOLOv8精确检测行人。

并且设定行人计数区域,实现实时计算区域内行人的数量。

这项技术可以制作一个,人员密集场所客流量统计监测系统。

非常适合火车站、地铁站、机场等场景,实现客流安全管理。

使用到Python版本即相关Python库。

Python 3.9.7

supervision 0.2.0

ultralytics 8.0.154

torchvision 0.13.0

opencv-contrib-python 4.8.0.74

opencv-python 4.8.0.74其中OpenCV库大家应该都不陌生。

supervision库是一个可重复使用的计算机视觉工具,帮助处理数据集、检测结果等工作。

可以降低我们学习计算机视觉的难度~

项目地址:

https://github.com/roboflow/supervision

接下来就先看一下全区域的行人检测代码吧!

import numpy as np

import supervision as sv

from ultralytics import YOLO

class CountObject():

def __init__(self, input_video_path, output_video_path) -> None:

# 加载YOLOv8模型

self.model = YOLO('yolov8s.pt')

# 输入视频, 输出视频

self.input_video_path = input_video_path

self.output_video_path = output_video_path

# 视频信息

self.video_info = sv.VideoInfo.from_video_path(input_video_path)

# 检测框属性

self.box_annotator = sv.BoxAnnotator(thickness=4, text_thickness=4, text_scale=2)

def process_frame(self, frame: np.ndarray, _) -> np.ndarray:

# 检测

results = self.model(frame, imgsz=1280)[0]

detections = sv.Detections.from_yolov8(results)

detections = detections[detections.class_id == 0]

# 绘制检测框

box_annotator = sv.BoxAnnotator(thickness=4, text_thickness=4, text_scale=2)

labels = [f"{self.model.names[class_id]} {confidence:0.2f}" for _, confidence, class_id, _ in detections]

frame = box_annotator.annotate(scene=frame, detections=detections, labels=labels)

return frame

def process_video(self):

# 处理视频

sv.process_video(source_path=self.input_video_path, target_path=self.output_video_path,

callback=self.process_frame)

if __name__ == "__main__":

obj = CountObject('demo.mp4', 'single.mp4')

obj.process_video()运行代码,结果如下。

发现检测效果还不错,目标的置信度都挺高的。

进行区域划分,编写算法,判断不同区域内的人员数量。

import numpy as np

import supervision as sv

from ultralytics import YOLO

class CountObject():

def __init__(self, input_video_path, output_video_path) -> None:

# 加载YOLOv8模型

self.model = YOLO('yolov8s.pt')

# 设置颜色

self.colors = sv.ColorPalette.default()

# 输入视频, 输出视频

self.input_video_path = input_video_path

self.output_video_path = output_video_path

# 多边形区域

self.polygons = [

np.array([

[540, 985],

[1620, 985],

[2160, 1920],

[1620, 2855],

[540, 2855],

[0, 1920]

], np.int32),

np.array([

[0, 1920],

[540, 985],

[0, 0]

], np.int32),

np.array([

[1620, 985],

[2160, 1920],

[2160, 0]

], np.int32),

np.array([

[540, 985],

[0, 0],

[2160, 0],

[1620, 985]

], np.int32),

np.array([

[0, 1920],

[0, 3840],

[540, 2855]

], np.int32),

np.array([

[2160, 1920],

[1620, 2855],

[2160, 3840]

], np.int32),

np.array([

[1620, 2855],

[540, 2855],

[0, 3840],

[2160, 3840]

], np.int32)

]

# 视频信息

self.video_info = sv.VideoInfo.from_video_path(input_video_path)

# 多边形区域

self.zones = [

sv.PolygonZone(

polygon=polygon,

frame_resolution_wh=self.video_info.resolution_wh

)

for polygon

in self.polygons

]

# 区域计数数量标识属性

self.zone_annotators = [

sv.PolygonZoneAnnotator(

zone=zone,

color=self.colors.by_idx(index),

thickness=6,

text_thickness=8,

text_scale=4

)

for index, zone

in enumerate(self.zones)

]

# 检测框绘制属性

self.box_annotators = [

sv.BoxAnnotator(

color=self.colors.by_idx(index),

thickness=4,

text_thickness=4,

text_scale=2

)

for index

in range(len(self.polygons))

]

def process_frame(self, frame: np.ndarray, i) -> np.ndarray:

# 检测

results = self.model(frame, imgsz=1280)[0]

detections = sv.Detections.from_yolov8(results)

detections = detections[(detections.class_id == 0) & (detections.confidence > 0.5)]

# 绘制区域、区域数量、检测框

for zone, zone_annotator, box_annotator in zip(self.zones, self.zone_annotators, self.box_annotators):

mask = zone.trigger(detections=detections)

detections_filtered = detections[mask]

frame = box_annotator.annotate(scene=frame, detections=detections_filtered, skip_label=True)

frame = zone_annotator.annotate(scene=frame)

return frame

def process_video(self):

# 处理视频

sv.process_video(source_path=self.input_video_path, target_path=self.output_video_path,

callback=self.process_frame)

if __name__ == "__main__":

obj = CountObject('demo.mp4', 'multiple.mp4')

obj.process_video()运行代码,结果如下。

这样每个区域的行人就计算展示出来了。

/ 02 /

小狗检测器

小狗检测器,防止小狗跳到沙发上,留下一团毛~

非常适合想要训练宠物,或者保护家具的宠物主人。

实现实时检测小狗,并且在小狗爬上沙发后触发报警。

其中报警推送可以设置成微信or短信,也是可以实现的。

具体代码如下。

import io

import os

import cv2

import telebot

import threading

import numpy as np

from PIL import Image

import pygame as pygame

from ultralytics import YOLO

from datetime import datetime, timedelta

# 设置报警机器人, 可以使用微信替代

# telegram_key = ""

# chat_id = ""

# bot = telebot.TeleBot(telegram_key)

# shared_frame = None

# 发消息

# def send_message(chat_id, message):

# bot.send_message(chat_id, message)

#

# 发图片

# def send_photo(chat_id, frame):

# img_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

# img = Image.fromarray(img_rgb)

#

# bio = io.BytesIO()

# bio.name = 'image.jpeg'

# img.save(bio, 'JPEG')

# bio.seek(0)

#

# bot.send_photo(chat_id, bio)

# 监听

# def telegram_listener():

# @bot.message_handler(func=lambda message: True)

# def echo_all(message):

# if message.text == "update":

# global shared_frame

# if shared_frame is not None:

# send_photo(chat_id, shared_frame)

# bot.polling()

# 报警机器人

# telegram_thread = threading.Thread(target=telegram_listener)

# telegram_thread.start()

# 时间计算

last_sent_time = datetime.now() - timedelta(seconds=15)

# 加载模型

model = YOLO("yolov8n.pt")

# 检测类别

classes = [

'person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck',

'boat', 'traffic light', 'fire hydrant', 'stop sign', 'parking meter', 'bench',

'bird', 'cat', 'dog', 'horse', 'sheep', 'cow', 'elephant', 'bear', 'zebra',

'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee', 'skis',

'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard',

'surfboard', 'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon',

'bowl', 'banana', 'apple', 'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog',

'pizza', 'donut', 'cake', 'chair', 'couch', 'potted plant', 'bed', 'dining table',

'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone', 'microwave',

'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors',

'teddy bear', 'hair drier', 'toothbrush'

]

# 初始化pygame, 加载报警音乐

pygame.init()

pygame.mixer.init()

alarm_played = False

pygame.mixer.music.load(os.path.abspath('alarm.mp3'))

COLORS = np.random.uniform(0, 255, size=(len(classes), 3))

# 加载视频

cap = cv2.VideoCapture('test_video1.mp4')

fps_camera = cap.get(cv2.CAP_PROP_FPS)

target_fps = 10

n = int(fps_camera / target_fps)

frame_counter, not_detected = 0, 0

while True:

ret, frame = cap.read()

if not ret:

break

shared_frame = frame

if frame_counter % n == 0:

# 检测

outs = model(frame, task='detect', iou=0.2, conf=0.3, show=True, save_conf=True, classes=[15, 16, 57, 59],

boxes=True)

# 算法部分, 计算狗和沙发的位置情况, 并且触发报警

pred_classes = [classes[int(i.item())] for i in outs[0].boxes.cls]

pred_bbox = [i.tolist() for i in outs[0].boxes.xywh]

length = len(pred_classes)

dog_boxes = []

couch_boxes = []

dog_flag, couch_bed_flag = 0, 0

for i in range(length):

if pred_classes[i] in ['dog', 'cat']:

dog_boxes.append((round(pred_bbox[i][0]), round(pred_bbox[i][1]),

round(pred_bbox[i][0] + pred_bbox[i][2]), round(pred_bbox[i][1] + pred_bbox[i][3])))

dog_flag = 1

if pred_classes[i] in ['couch', 'bed']:

couch_boxes.append((round(pred_bbox[i][0]), round(pred_bbox[i][1]),

round(pred_bbox[i][0] + pred_bbox[i][2]), round(pred_bbox[i][1] + pred_bbox[i][3])))

couch_bed_flag = 1

if alarm_played and (not dog_flag or not couch_bed_flag):

dog_boxes = [(0, 0, 0, 0)]

couch_boxes = [(0, 0, 0, 0)]

else:

for dog_box in dog_boxes:

for couch_box in couch_boxes:

if dog_box[3] < (couch_box[3] - ((couch_box[3] - couch_box[1]) * 0.5)) and (

(couch_box[0] < dog_box[0] < couch_box[2]) or (

couch_box[0] < dog_box[2] < couch_box[2])) and dog_flag and couch_bed_flag:

not_detected = 0

if not alarm_played:

# 发送消息

# if datetime.now() - last_sent_time >= timedelta(seconds=15):

# send_message(chat_id, "Dog has detected on couch!")

# last_sent_time = datetime.now()

# send_photo(chat_id, frame)

pygame.mixer.music.play(-1) # play in a loop

alarm_played = True

else:

if alarm_played:

not_detected += 1

if not_detected > 10:

not_detected = 0

pygame.mixer.music.stop()

alarm_played = False

couch_bed_flag = 0

dog_flag = 0

dog_boxes = [(0, 0, 0, 0)]

couch_boxes = [(0, 0, 0, 0)]

frame_counter += 1

if cv2.waitKey(1) & 0xFF == ord('q'):

break

cap.release()

cv2.destroyAllWindows()运行代码,结果如下。

使用预训练模型获取小狗和沙发的目标框,并且计算两者的关系,以此来触发报警。

/ 03 /

车辆检测器

这是一个交通监控系统的项目。

使用OpenCV和YOLOv8实现如下功能,实时车辆检测、车辆跟踪、实时车速检测,以及检测车辆是否超速。

跟踪代码如下,赋予每个目标唯一ID,避免重复计算。

import math

class Tracker:

def __init__(self):

# 存储目标的中心位置

self.center_points = {}

# ID计数

# 每当检测到一个新的目标id时, 计数将增加1

self.id_count = 0

def update(self, objects_rect):

# 目标的方框和ID

objects_bbs_ids = []

# 获取新目标的中心点

for rect in objects_rect:

x, y, w, h = rect

cx = (x + x + w) // 2

cy = (y + y + h) // 2

# 看看这个目标是否已经被检测到过

same_object_detected = False

for id, pt in self.center_points.items():

dist = math.hypot(cx - pt[0], cy - pt[1])

if dist < 35:

self.center_points[id] = (cx, cy)

# print(self.center_points)

objects_bbs_ids.append([x, y, w, h, id])

same_object_detected = True

break

# 检测到新目标,分配ID给新目标

if same_object_detected is False:

self.center_points[self.id_count] = (cx, cy)

objects_bbs_ids.append([x, y, w, h, self.id_count])

self.id_count += 1

# 按中心点清理字典, 删除不再使用的ID

new_center_points = {}

for obj_bb_id in objects_bbs_ids:

_, _, _, _, object_id = obj_bb_id

center = self.center_points[object_id]

new_center_points[object_id] = center

# 更新字典, 删除未使用的ID

self.center_points = new_center_points.copy()

return objects_bbs_ids这是检测代码,速度计算那部分代码有点小粗糙。

最好是能有相机标定这一过程,这样速度才会准确。

import cv2

import time

import numpy as np

from tracker import *

import pandas as pd

from ultralytics import YOLO

# 加载模型

model = YOLO('yolov8s.pt')

def RGB(event, x, y, flags, param):

if event == cv2.EVENT_MOUSEMOVE:

colorsBGR = [x, y]

print(colorsBGR)

cv2.namedWindow('RGB')

cv2.setMouseCallback('RGB', RGB)

cap = cv2.VideoCapture('Vid1.mp4')

my_file = open("coco.txt", "r")

data = my_file.read()

class_list = data.split("\n")

# print(class_list)

count = 0

speed = {}

area = [(225, 335), (803, 335), (962, 408), (57, 408)]

area_c = set()

# 跟踪算法

tracker = Tracker()

speed_limit = 62

while True:

ret, frame = cap.read()

if not ret:

break

count += 1

if count % 3 != 0:

continue

frame = cv2.resize(frame, (1020, 500))

results = model.predict(frame)

# print(results)

a = results[0].boxes.boxes

px = pd.DataFrame(a).astype("float")

# print(px)

list = []

# 使用YOLOv8的检测结果, 进行算法设计

for index, row in px.iterrows():

# print(row)

x1 = int(row[0])

y1 = int(row[1])

x2 = int(row[2])

y2 = int(row[3])

d = int(row[5])

c = class_list[d]

if 'car' in c:

list.append([x1, y1, x2, y2])

bbox_id = tracker.update(list)

for bbox in bbox_id:

x3, y3, x4, y4, id = bbox

cx = int(x3+x4)//2

cy = int(y3+y4)//2

results = cv2.pointPolygonTest(

np.array(area, np.int32), ((cx, cy)), False)

if results >= 0:

cv2.circle(frame, (cx, cy), 4, (0, 0, 255), -1)

cv2.putText(frame, str(id), (x3, y3), cv2.FONT_HERSHEY_COMPLEX,

0.8, (0, 255, 255), 2, cv2.LINE_AA)

cv2.rectangle(frame, (x3, y3), (x4, y4), (0, 0, 255), 2)

area_c.add(id)

now = time.time()

if id not in speed:

speed[id] = now

else:

try:

prev_time = speed[id]

speed[id] = now

dist = 2

a_speed_ms = dist / (now - prev_time)

a_speed_kh = a_speed_ms * 3.6

cv2.putText(frame, str(int(a_speed_kh))+'Km/h', (x4, y4),

cv2.FONT_HERSHEY_COMPLEX, 0.8, (0, 255, 255), 2, cv2.LINE_AA)

speed[id] = now

except ZeroDivisionError:

pass

# 检查速度是否超过速度限制

# if a_speed_kh >= speed_limit:

# # Display a warning message

# cv2.putText(frame, "Speed limit violated!", (440, 115),

# cv2.FONT_HERSHEY_TRIPLEX, 0.8, (255, 0, 255), 2, cv2.LINE_AA)

# # Display the message for 3 seconds

# start_time = time.time()

# while time.time() - start_time < 3:

# cv2.imshow("RGB", frame)

# if cv2.waitKey(1) & 0xFF == 27:

# break

cv2.polylines(frame, [np.array(area, np.int32)], True, (0, 255, 0), 2)

cnt = len(area_c)

cv2.putText(frame, ('Vehicle-Count:-')+str(cnt), (452, 50),

cv2.FONT_HERSHEY_TRIPLEX, 1, (102, 0, 255), 2, cv2.LINE_AA)

cv2.imshow("RGB", frame)

if cv2.waitKey(1) & 0xFF == 27:

break

# 刷新,释放资源

cap.release()

cv2.destroyAllWindows()运行代码,结果如下。

发现效果还不错~

/ 04 /

总结

以上操作,就是三个使用YOLOv8实现的计算机视觉项目。

当然我们还可以通过预训练模型实现其它功能。

如果预训练模型的检测效果在你要使用的场景不太好,那就是需要加加数据了~

相关文件及代码都已上传,公众号回复【YOLO】即可获取。

万水千山总是情,点个 👍 行不行。

推荐阅读

文章来源:https://www.toymoban.com/news/detail-776623.html

文章来源:https://www.toymoban.com/news/detail-776623.html

··· END ···文章来源地址https://www.toymoban.com/news/detail-776623.html

到了这里,关于使用OpenCV和YOLOv8制作目标检测器(附源码)的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!

![[C++]使用纯opencv部署yolov8旋转框目标检测](https://imgs.yssmx.com/Uploads/2024/04/858578-1.jpeg)